cv_paper_reviews

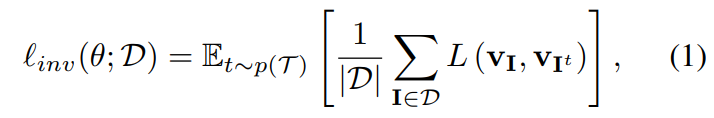

1.PIRL Review

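In many prior works, the representations learned through pretext tasks change as the image is transformed. This paper proposes PIRL, in which the extracted representations do not change even when the image is transformed. Existing image recognition models rely on labeled data or bounding boxes -> on the long tail, good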

2.Attention Is All You Need(Transformer) Review

0\. Abstract

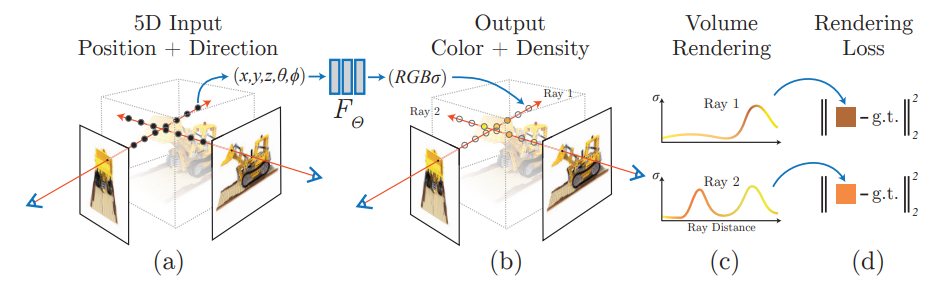

3.NeRF: Representing Scenes as Neural Radiance Fields for View Synthesis Review

0. Abstract

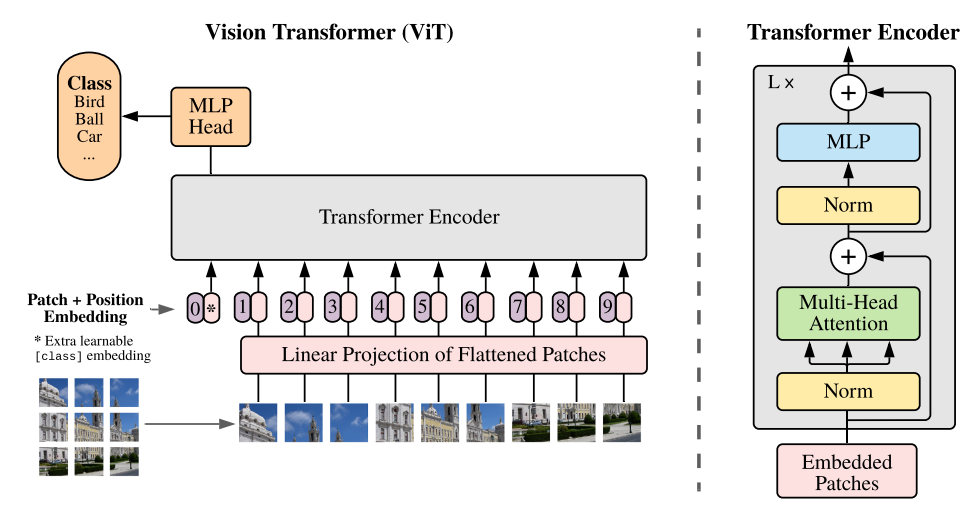

4.Vision Transformer(ViT) Review

Reference

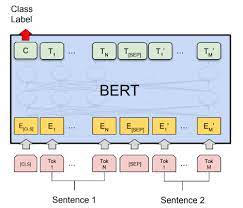

5.BERT Review

Reference

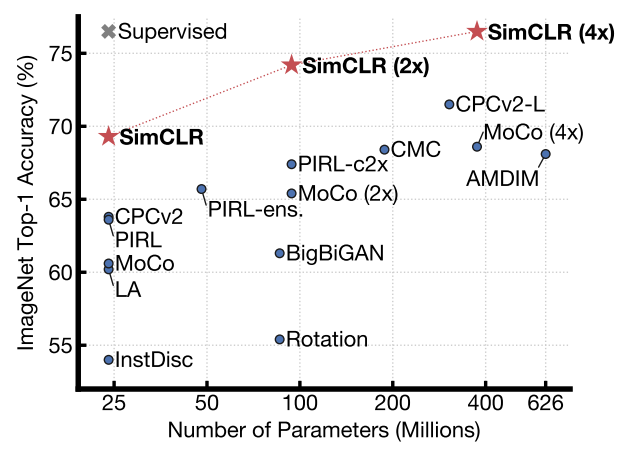

6.SimCLR Review

Reference

7.Unsupervised Feature Learning via Non-Parametric Instance Discrimination(NPID) Review

The topic of this paper is said to have come from object recognition on ImageNet. As Figure 1 shows, looking at the top-5 classification error for a leopard, the softmax values of animals that look similar to a leopard, such as jaguars and cheetahs

8.Momentum Contrast for Unsupervised Visual Representation Learning(MoCo) Review

1. Abstract & Introduction This paper performs unsupervised learning on images using a dynamic dictionary (queue) and a contrastive loss. Here, unsupervised learning is dyna

9.GRAF: Generative Radiance Fields for 3D-Aware Image Synthesis Review

adf

10.Review: Two-Stream Convolutional Networks for Action Recognition in Videos

1. Introduction This paper uses CNNs to recognize human actions, which contain spatial and temporal information. Architecture is based

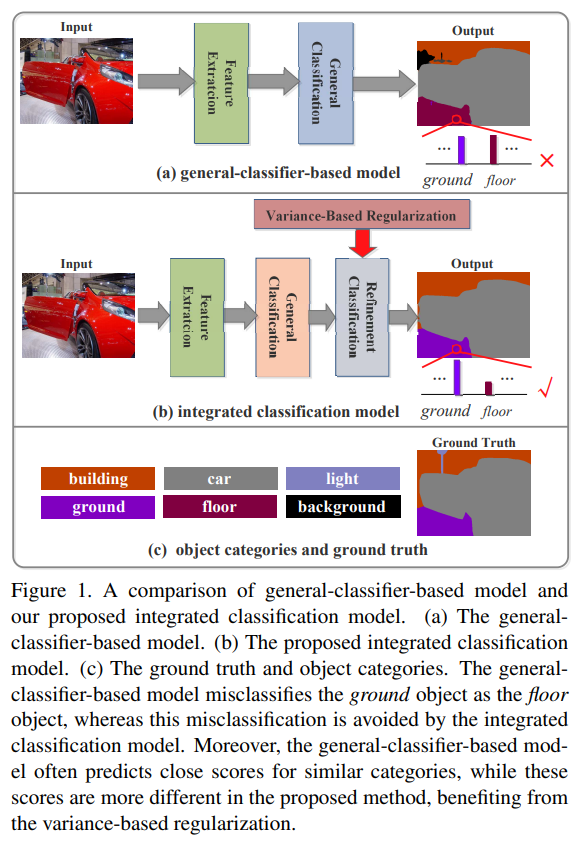

11.Review: Scene Parsing via Integrated Classification Model and Variance-Based Regularization (incomplete)

Scene parsing Scene parsing segments and parses an image into different image regions associated with semantic categories. Most scene parsing model

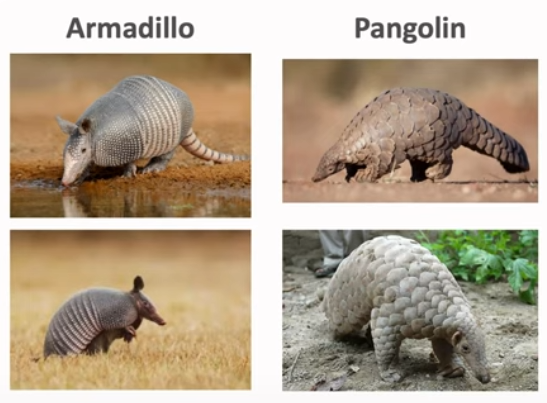

12.Few Shot Learning

A human can recognize that the query is a pangolin based on the differences between the four images, but this is challenging for a computer because there are a few ima

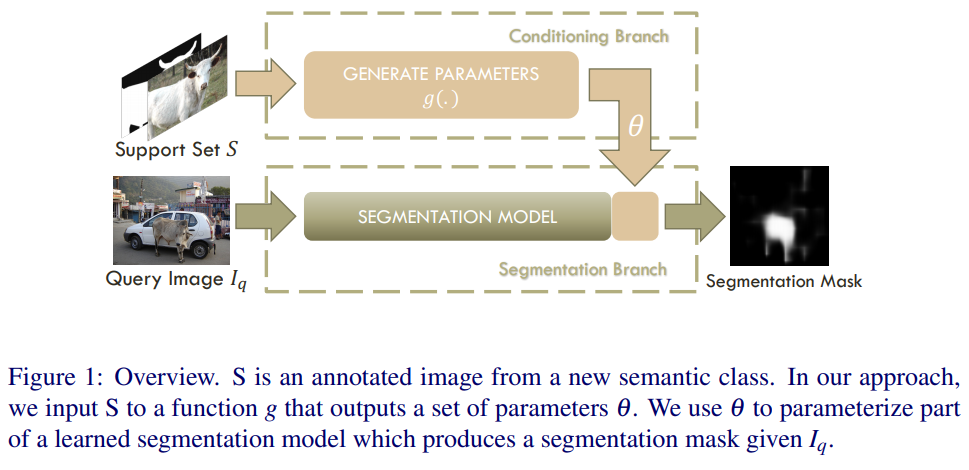

13.Review: One-shot learning for semantic segmentation

This paper proposes semantic segmentation with one-shot learning, that is, pixel-level prediction from a single image and its mask. A simple imp

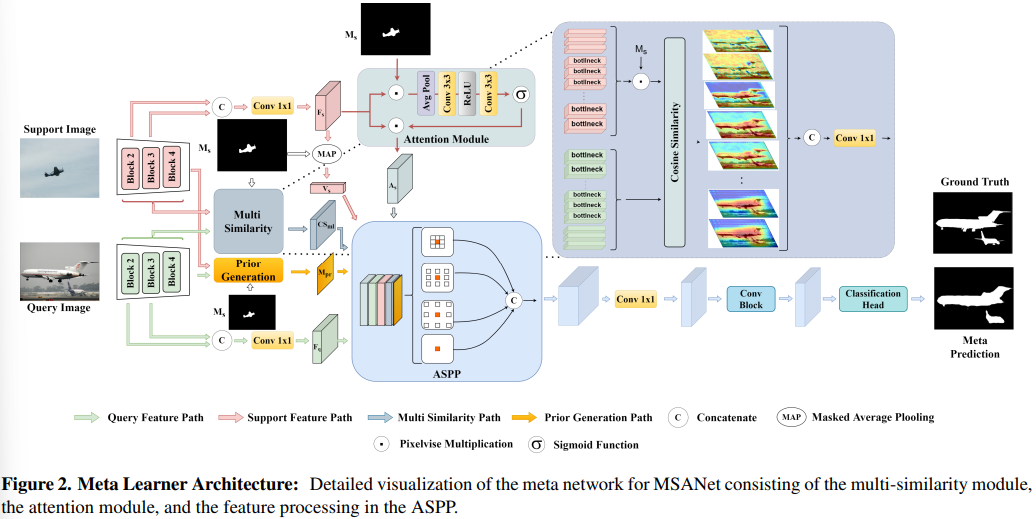

14.[Review] Multi-Similarity and Attention Guidance for Boosting Few-Shot Segmentation(MSANet)

The problem with traditional supervised CNNs is that they need a large amount of well-annotated data, a balanced class distribution, and sample representati

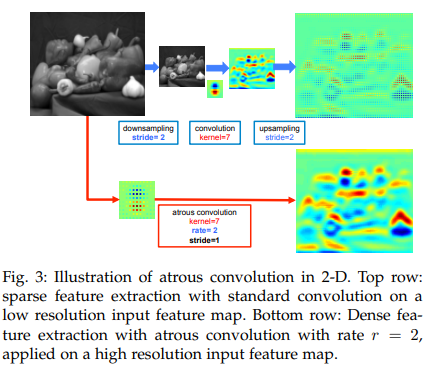

15.DeepLab: Semantic Image Segmentation with Deep Convolutional Nets, Atrous Convolution, and Fully Connected CRFs (Review)

1. Motivation There are three challenges in semantic segmentation with DCNNs: (1) reduced feature resolution, (2) existence of objects at multiple scal

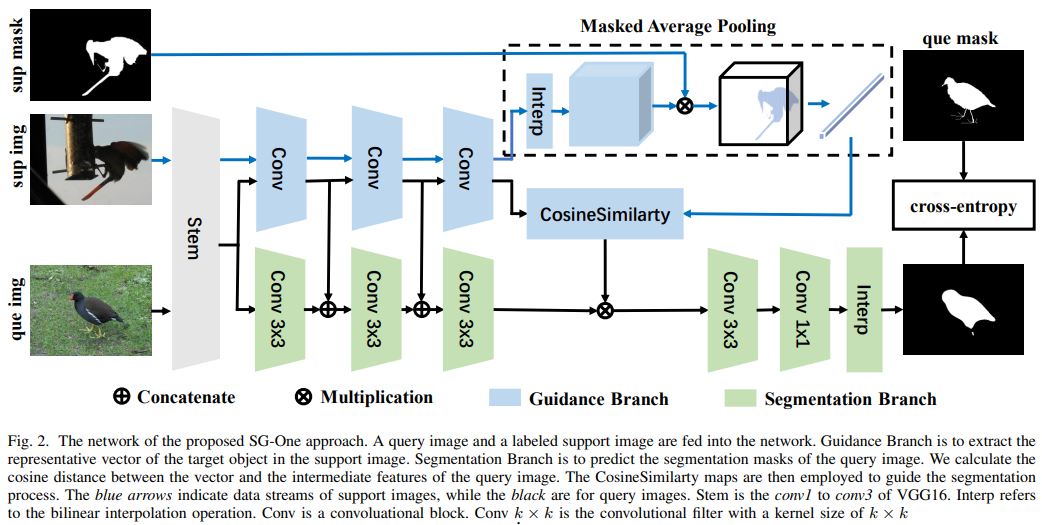

16.[Review] SG-One: Similarity Guidance Network for One-Shot Semantic Segmentation

Traditional segmentation models such as U-Net, FCN, etc. require a heavy workload for labeling tasks. To reduce the budget, one-shot segmentation is applied. The one-s

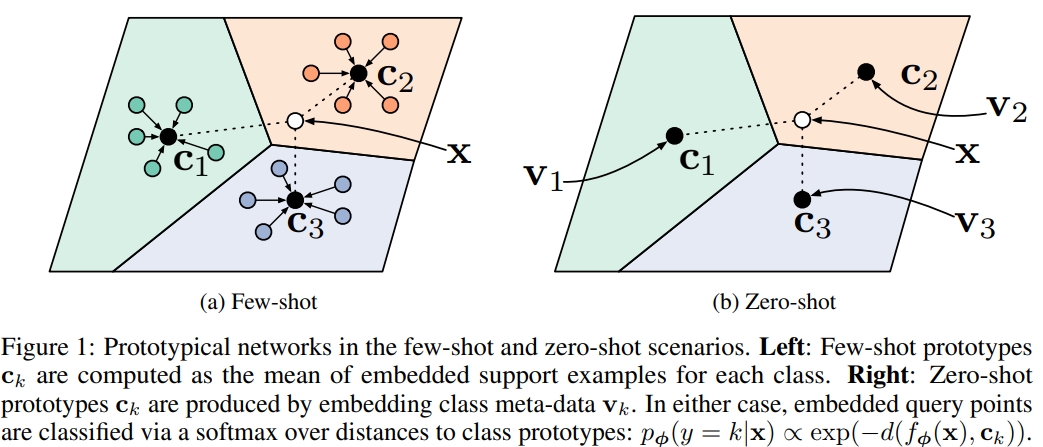

17.[Review] Prototypical Networks for Few-shot Learning

Traditional neural network approaches have the problem that they need an abundant amount of data. To overcome this problem, few-shot learning is proposed whic

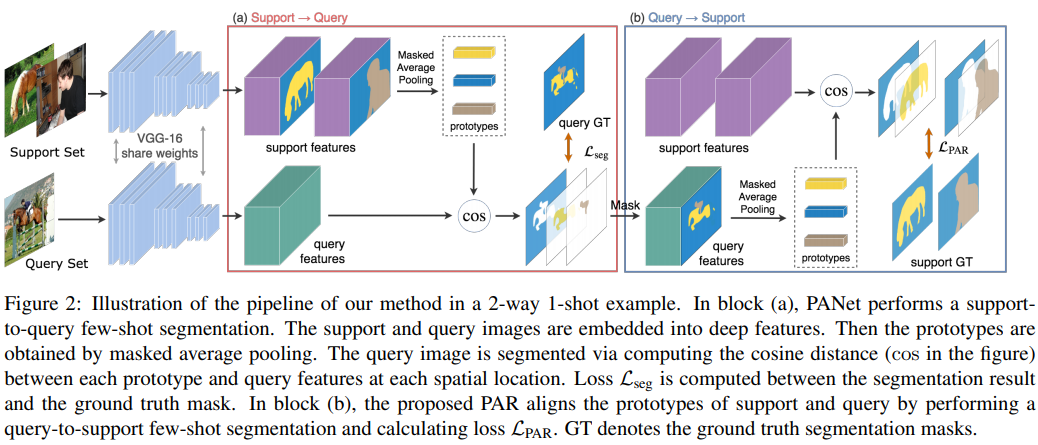

18.[Review] PANet: Few-Shot Image Semantic Segmentation with Prototype Alignment

Previous few-shot segmentation methods do not differentiate the feature extraction of the target object in the support set and the segmentation process of

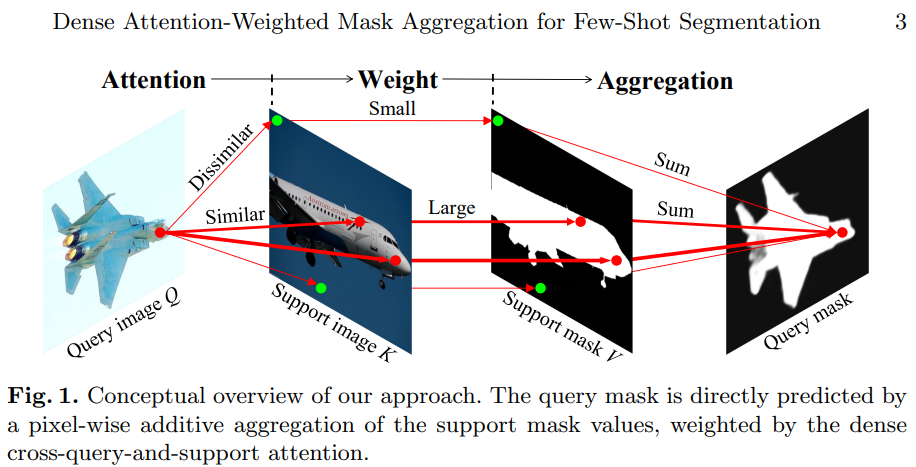

19.[Review] Dense Cross-Query-and-Support Attention Weighted Mask Aggregation for Few-Shot Segmentation - incomplete

1. Motivation

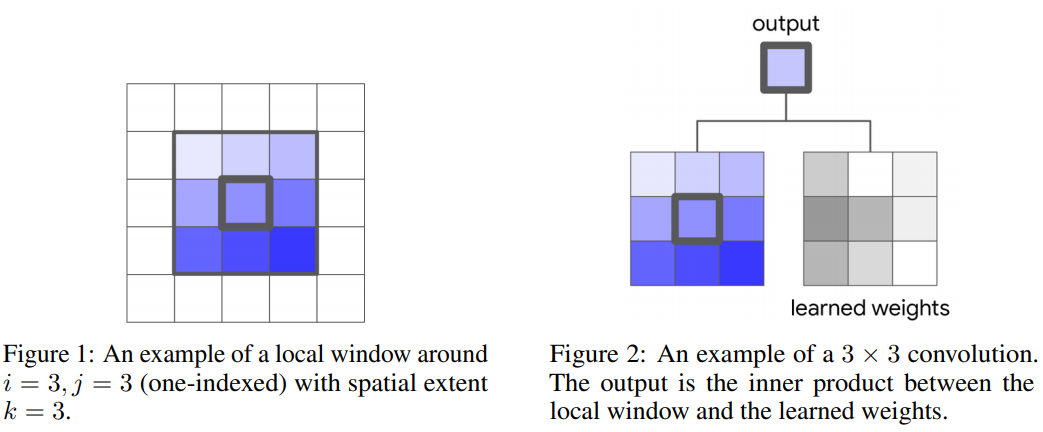

20.[Review] Stand-Alone Self-Attention in Vision Models

CNN architectures have outstanding performance in computer vision applications. However, capturing long-range interactions for convolutions is

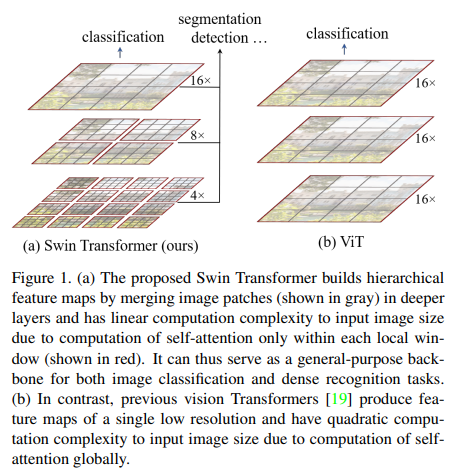

21.[Review] Swin Transformer: Hierarchical Vision Transformer using Shifted Windows

The transformer structure shows that adopting attention modules can outperform traditional CNN-based models. However, the transformer has a problem t

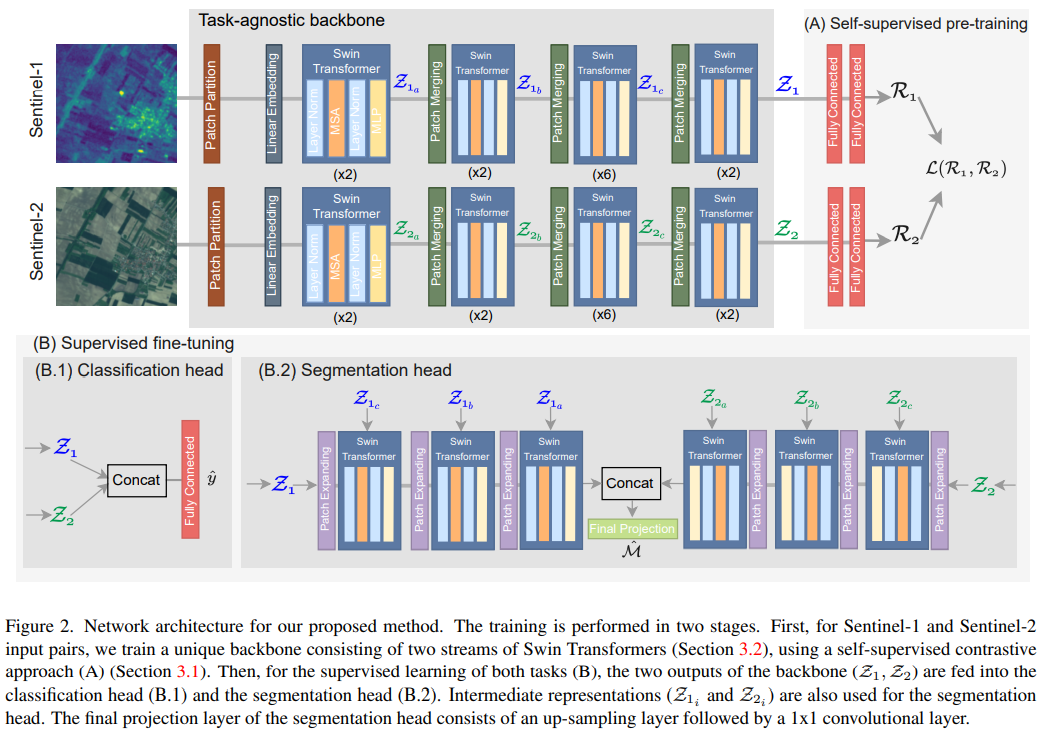

22.[Review] Self-supervised Vision Transformers for Land-cover Segmentation and Classification

This paper proposes a method that combines the vision transformer architecture with self-supervised learning. Overall structure This work proposed two